A Hard Disk Drive

HDD, SSD – What?

A hard drive (HDD) is a computer component that utilizes spinning magnetic disks (platters) and read/write heads to store information permanently. Hard drives are what is known as non-volatile storage, meaning that the information is retained after the power is removed, such as when you turn your computer off.

Hard drives have existed in mainstream computers for a long time, and they pretty much shape our views of computer storage – or at least they did until now.

Hard drives recently encountered a new opponent – solid state drives, or SSDs. An SSD is essentially a large amount of flash memory (the same type of memory in camera cards and USB flash drives) stuffed into a package that looks like an HDD. That way you can theoretically use an SSD anywhere you can use a hard drive. But what’s the difference?

SSD or HDD?

SSDs were developed to replace HDDs and resolve some of the issues that HDDs have. First of all, HDDs have spinning platters and mechanical heads that read and write data. This creates noise and heat, and makes the drives fragile. They also consume a relatively large amount of power, and because they are mechanical, their parts wear out and break down. However, the largest area for improvement is performance. An average hard drive can read/write at approximately 100MB/s and has an access time of around 15ms. Access time is the time it takes for the drive to seek to an area of the disk and retrieve some information.

After reading that, who would want such a horrible piece of technology? Well, there are upsides too. HDDs have enormous storage capacities, ranging up to 3TB (3000GB). They can also be read and written a practically unlimited number of times, and can retain their information for very long periods. They are also remarkably cheap; a 1TB (1000GB) HDD will only cost about $70!

SSDs, on the other hand, use solid state flash memory, which means that all the information is stored on a chip (or series of chips) with no moving parts. This means less power consumption and makes them more resilient to drops and vibration. The main reason for using an SSD is performance. A typical SSD can read/write at around 200MB/s, and since there are no moving parts, the access time is almost instant.

This sounds great, so why doesn’t everyone have one? SSDs still produce heat; in fact, they sometimes produce more heat than HDDs. They are also unpredictable in terms of failures. SSDs today can die without warning, and while there are tools to help predict failures, they don’t always work. (This technology, known as SMART, also exists for HDDs, where it is far more mature.) Flash memory also wears out after a limited number of write cycles, so longevity under heavy writing is not so great. The other main points are price and capacity. You can get a 1TB HDD for about $70, while the same capacity SSD will cost upwards of $2,200!
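
To put the numbers above side by side, here is a rough back-of-the-envelope sketch in Python. It only uses the approximate figures quoted in this post (100MB/s and 15ms for an HDD, 200MB/s and a near-zero access time for an SSD, $70 and $2,200 per terabyte). The function names are made up for illustration, and the model assumes one seek per file, which is a simplification.

```python
# Approximate figures quoted in this post; real drives vary widely.
HDD = {"throughput_mb_s": 100, "access_ms": 15, "price_usd_per_tb": 70}
# The SSD access time is treated as zero here ("almost instant" in the text).
SSD = {"throughput_mb_s": 200, "access_ms": 0, "price_usd_per_tb": 2200}


def read_time_s(drive, file_count, file_size_mb):
    """Estimate seconds to read `file_count` files, assuming one seek per file."""
    seek_time = file_count * drive["access_ms"] / 1000
    transfer_time = file_count * file_size_mb / drive["throughput_mb_s"]
    return seek_time + transfer_time


def price_per_gb(drive):
    """Price per gigabyte, using 1TB = 1000GB as the post does."""
    return drive["price_usd_per_tb"] / 1000


if __name__ == "__main__":
    for name, drive in (("HDD", HDD), ("SSD", SSD)):
        seconds = read_time_s(drive, file_count=1000, file_size_mb=1)
        print(f"{name}: {seconds:.1f}s for 1000 x 1MB files, "
              f"${price_per_gb(drive):.2f} per GB")
```

With lots of small files, the seek time dominates on the HDD, which is why access time matters as much as raw transfer speed.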

The Solution – RAID

Chances are that you have an HDD in your computer, unless you’re rich. So what can you do to get SSD performance with HDDs? The answer is simple – RAID. RAID stands for Redundant Array of Independent Disks. RAID is a technology that allows multiple (2 or more) standard off-the-shelf HDDs to operate together in what is known as an array. There are many forms of RAID, known as levels. Of these levels, there are 2 types: Standard and Extended (often called nested). Standard levels are numbered 0 through 6, and all extended levels are made by combining standard levels.

So what does RAID do? Well, that depends. Each level is designed to do something different. For example, level 1 is known as mirroring. It requires 2 or more drives. In this mode your computer stores the same information on each drive simultaneously. If a drive fails, not only is the data safe on the other drive, but the system can continue to operate as if nothing has happened. While that helps protect your data, it won’t make writes any faster.
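
Mirroring is easy to picture in code. The toy Python class below is only a sketch of the idea, not how any real RAID driver works: the `Mirror` class, its methods, and the use of dictionaries as "drives" are all invented for illustration.

```python
class Mirror:
    """Toy RAID 1: every write goes to all healthy drives; reads use any one."""

    def __init__(self, drive_count=2):
        if drive_count < 2:
            raise ValueError("mirroring needs at least 2 drives")
        # Each "drive" is just a dict mapping block number -> data.
        self.drives = [dict() for _ in range(drive_count)]
        self.failed = set()

    def write(self, block, data):
        """Store the same data on every drive that is still working."""
        for index, drive in enumerate(self.drives):
            if index not in self.failed:
                drive[block] = data

    def fail(self, index):
        """Simulate a drive dying and losing its contents."""
        self.failed.add(index)
        self.drives[index].clear()

    def read(self, block):
        """Return the block from the first drive that is still working."""
        for index, drive in enumerate(self.drives):
            if index not in self.failed:
                return drive[block]
        raise IOError("all drives in the mirror have failed")


if __name__ == "__main__":
    array = Mirror(drive_count=2)
    array.write(0, b"holiday photos")
    array.fail(0)          # one drive dies...
    print(array.read(0))   # ...and the data is still readable
```

Notice that `write` does the same work on every drive, which is why mirroring adds safety rather than write speed.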

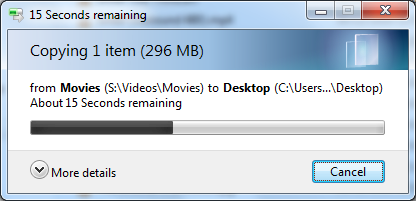

RAID 0 is known as striped storage. Imagine that you have Lego blocks stacked on top of each other in alternating colours: red, blue, red, blue, and so on. Now think of the blocks as numbered from top to bottom, starting at 1. Each block is a chunk of data and the tower represents a hard drive. The drive reads the top block first, then the next and so forth (following the numbers) at a predictable speed of, let’s say, 100MB/s.

Now imagine taking all the blue blocks and making a tower of blue, and a tower of red. The numbers stay the same, so block 1 is on the red tower, block 2 is on the blue tower, block 3 is back on red, and so on. In this case each tower is a separate drive. When asked to read data, each drive can read its blocks at 100MB/s in parallel. Since there are 2 drives, and 100MB/s of data is streaming out of each one, the combined read speed is 200MB/s. In this configuration, each drive stores half of the data. With 3 drives, the speed would theoretically triple, and this could go on and on.

The 200MB/s figure is theoretical; measured speeds vary with the controller, caching, and the kind of access, so your results may land above or below it. What is even better is that the sizes of the drives are combined, so two 1TB drives create a 2TB array in RAID 0. So if we bought 2 drives, we could create a 2TB RAID 0 array that can match or beat an average SSD’s transfer speed for around $140, rather than $6,000 for an equivalently sized SSD. Keep in mind that RAID 0 has no redundancy: if either drive fails, the whole array is lost.
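
The Lego picture translates almost directly into a few lines of Python. This is a sketch of round-robin striping only; the function names are invented for illustration, and real RAID 0 works on fixed-size chunks of disk rather than list items.

```python
def stripe(blocks, drive_count):
    """Deal blocks out round-robin: first block to drive 0, next to drive 1, ..."""
    drives = [[] for _ in range(drive_count)]
    for position, block in enumerate(blocks):
        drives[position % drive_count].append(block)
    return drives


def unstripe(drives):
    """Rebuild the original order by taking one block from each drive in turn."""
    drive_count = len(drives)
    total = sum(len(drive) for drive in drives)
    return [drives[i % drive_count][i // drive_count] for i in range(total)]


def theoretical_read_mb_s(per_drive_mb_s, drive_count):
    """Ideal combined speed when every drive streams in parallel."""
    return per_drive_mb_s * drive_count


if __name__ == "__main__":
    tower = ["red 1", "blue 2", "red 3", "blue 4", "red 5", "blue 6"]
    red_tower, blue_tower = stripe(tower, drive_count=2)
    print(red_tower)    # the blocks that land on the first drive
    print(blue_tower)   # the blocks that land on the second drive
    print(unstripe([red_tower, blue_tower]) == tower)
    print(theoretical_read_mb_s(100, 2), "MB/s in theory")
```

Because each drive holds only part of the data, `unstripe` needs every drive to be present, which is the code version of "lose one drive, lose the array".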

I personally like to use RAID level 10, that is, levels 1+0. It uses a minimum of 4 drives: the drives are paired into mirrors for data security, and the mirrored pairs are then striped together for performance.

RAID Sounds Awesome! How Do I Get It?

RAID is a technology, not a device. As I stated before, it is a way of using regular drives in special ways. The first criterion is that you must have a computer that supports more than one drive. Most (if not all) desktops do, but most laptops do not. If you do have a desktop, the first order of business is to get yourself a second (or maybe third) hard drive and install it. Installation is not very hard, so don’t fret. One important note is that when you buy a drive, get one that is the same size as the drive you already have. RAID arrays can work with different sized disks; however, the size of the array is restricted by the size of the smallest drive, so if you buy a larger or smaller disk, you may end up with wasted space.

Once that’s done, you need to choose what type of RAID you want to use. There are 3 types: Hardware RAID, FakeRAID, and Software RAID.

Hardware RAID is the best. It is supported by all operating systems and the performance is optimal. The only downside is that you would have to purchase a physical device known as a RAID controller and install it in your computer, and they run around $300.

Software RAID is most likely what you would go with. This type uses a program in your operating system to manage the array(s). Performance for software RAID is comparable to that of hardware RAID, although support depends on your operating system. Those who use Windows or Linux should be just fine.

FakeRAID is the last resort. It is a cross between hardware and software. It is like hardware because arrays can be partially set up outside the operating system (in the BIOS, for example). FakeRAID, however, still uses software in the operating system to control the arrays. The performance of FakeRAID is not as good as true software RAID because its drivers usually are not as well optimized for the operating system as software RAID drivers. FakeRAID is only useful if you have multiple operating systems on your computer and need support for the same arrays in all of them. Since FakeRAID is partially built in to the computer, your computer must support FakeRAID in order to use it.
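
The "smallest drive" rule mentioned above is worth a quick calculation before you go shopping. The Python sketch below assumes the simple model where every drive contributes only as much space as the smallest one, and treats RAID 10 as striping across mirrored pairs. The function names are invented for illustration, and some RAID implementations handle mismatched drives differently, so treat the results as estimates.

```python
def usable_capacity_gb(level, drive_sizes_gb):
    """Estimate usable space for RAID 0, 1, or 10, limited by the smallest drive."""
    drive_count = len(drive_sizes_gb)
    smallest = min(drive_sizes_gb)
    if level == 0:
        if drive_count < 2:
            raise ValueError("RAID 0 needs at least 2 drives")
        return smallest * drive_count          # sizes are combined
    if level == 1:
        if drive_count < 2:
            raise ValueError("RAID 1 needs at least 2 drives")
        return smallest                        # every drive holds the same data
    if level == 10:
        if drive_count < 4 or drive_count % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return smallest * drive_count // 2     # half the drives are mirror copies
    raise ValueError("this sketch only covers levels 0, 1 and 10")


def unused_space_gb(drive_sizes_gb):
    """Space left unused on larger drives under the smallest-drive rule."""
    return sum(drive_sizes_gb) - min(drive_sizes_gb) * len(drive_sizes_gb)


if __name__ == "__main__":
    print(usable_capacity_gb(0, [1000, 1000]))   # two matching 1TB drives
    print(usable_capacity_gb(0, [1000, 500]))    # mismatched pair
    print(unused_space_gb([1000, 500]))          # space the larger drive cannot use
    print(usable_capacity_gb(10, [1000] * 4))    # four 1TB drives in RAID 10
```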

In a later post I will talk about setting up RAID arrays in Ubuntu Linux using a tool called mdadm.